Why Did JetBrains Create Mellum?

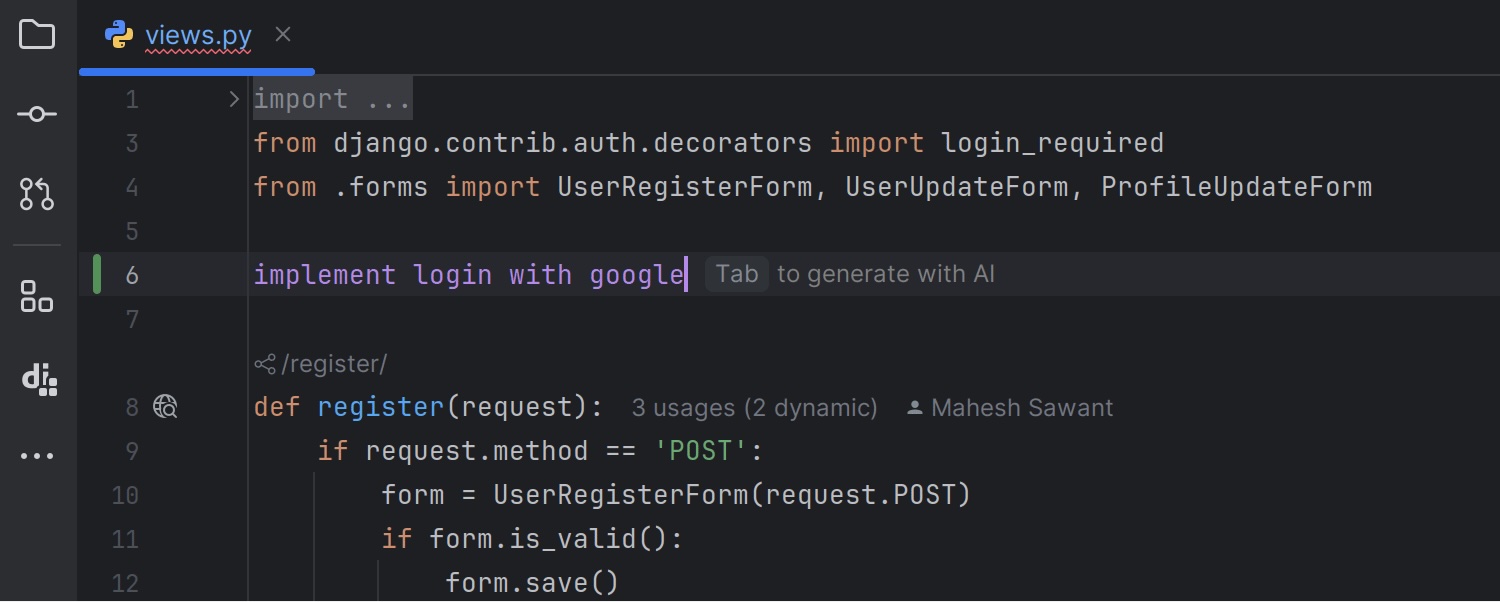

❓ Why did JetBrains create a purpose-built LLM?

Because not every model needs to be a generalist.

Mellum is a purpose-built language model trained from scratch to do one job well: code completion. It’s fast, lightweight, and focused, prioritizing depth over breadth.

🔎 It's what we're calling a “focal model”.

And this is just the beginning. Mellum is the first in a growing family of focal models, each designed around a specific developer task, from code completion to diff prediction and beyond.

🤗 And now, we’ve open-sourced Mellum on Hugging Face to invite transparency, collaboration, and contribution.

Researchers, engineers, and curious developers like you can explore the model, fine-tune it, and help shape what comes next 🤝